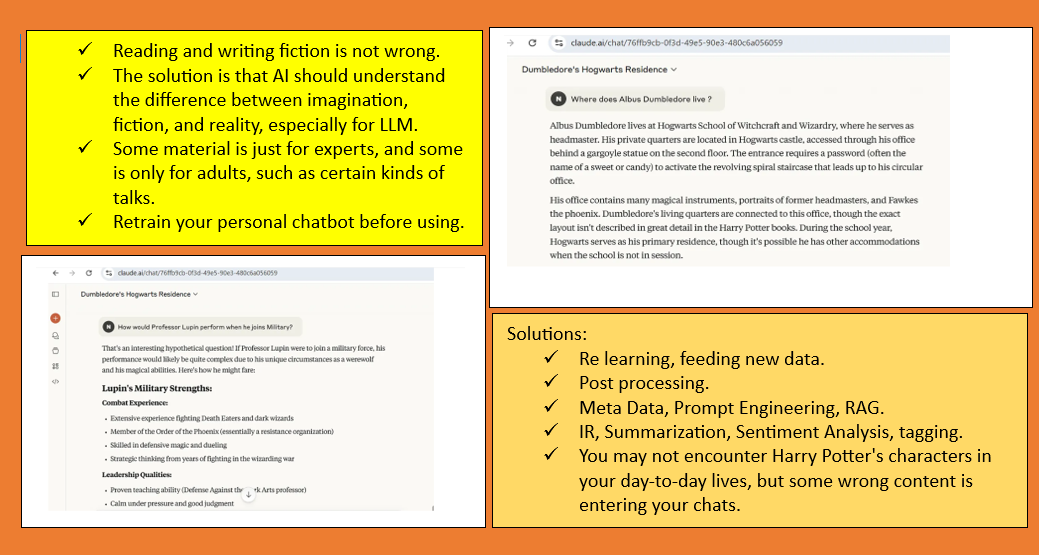

Note: Reading and writing fiction is not wrong. The point is that AI, and LLMs in particular, should understand the difference between imagination, fiction, and reality. Imagination is a joy to humans, but an LLM must recognize it as imagination.

The first question: “Where does Albus Dumbledore live?” The answer may surprise you: it reads as if he were a real man living on Earth.

The answer suggests that Dumbledore is not a fictional character but a real being, as if Hogwarts School were real. This is an example of the kind of problem that can lead to hallucinations and affect people’s self-image, among other things. We have to solve this. You may not encounter Harry Potter characters in your day-to-day life, but similarly wrong content may enter your chats.

The examples in this article serve as a reminder that an LLM is essentially like a child of a few years, trying to make sense of what it has learned and occasionally hallucinating based on the inputs it receives, for example by treating Dumbledore as a real person. Hallucinations can occur with other topics in the same way. Dumbledore is just one small example; there could be far worse hallucinations that still need to be tested for.

Remedies:

— Automated remedies.

— For each famous work of fiction, add metadata (metadata is data about data).

— Write an automated tool to create metadata for any hallucinating or fictional texts.

— Retrain your chatbot at your end before using it. Why not?

— Classify outputs by running Information Retrieval (IR) first, then Summarization and Sentiment Analysis on the retrieved text, to see the sentiment of the outputs. Outputs with the wrong sentiment or context should not be allowed (black magic presented as real, for example, is a wrong context). This can also be used with RAG.

— Post processing.

— Some manual work for fictional content, such as Dumbledore’s house being placed on Earth, and for wrong texts, such as outputs from apps like Eliza being treated as real chats.
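The metadata and post-processing remedies above can be sketched in a few lines of Python. This is only a minimal illustration, not a tested product: the `FICTION_REGISTRY` dictionary, the `FictionMetadata` fields, and the `add_fiction_notice` function are hypothetical names invented for this example, and a real registry would be generated by an automated tool rather than typed by hand.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class FictionMetadata:
    work: str  # the fictional work the entity belongs to
    kind: str  # for example "character" or "place"


# Hypothetical, hand-written registry used only for illustration.
FICTION_REGISTRY = {
    "albus dumbledore": FictionMetadata("Harry Potter", "character"),
    "dumbledore": FictionMetadata("Harry Potter", "character"),
    "harry potter": FictionMetadata("Harry Potter", "character"),
    "hogwarts": FictionMetadata("Harry Potter", "place"),
}


def find_fictional_entities(text: str) -> list[tuple[str, FictionMetadata]]:
    """Return every registry entry whose name appears as a whole word in text."""
    lowered = text.lower()
    found = []
    for name, meta in FICTION_REGISTRY.items():
        if re.search(rf"\b{re.escape(name)}\b", lowered):
            found.append((name, meta))
    return found


def add_fiction_notice(answer: str) -> str:
    """Post-process a chatbot answer: prepend a notice if it mentions
    registered fictional entities and does not already say so."""
    entities = find_fictional_entities(answer)
    if not entities:
        return answer
    # Crude heuristic (an assumption): the answer already flags itself as fiction.
    if "fiction" in answer.lower():
        return answer
    works = sorted({meta.work for _, meta in entities})
    notice = "Note: this answer refers to the fictional world of " + ", ".join(works) + "."
    return f"{notice}\n{answer}"


print(add_fiction_notice("Albus Dumbledore lives at Hogwarts in Scotland."))
```

A keyword lookup like this will miss paraphrases and nicknames, so it should be read as a starting point for the post-processing step rather than a complete solution.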

Another question: “Is Harry Potter under the stairs room still intact?” The answer here does not differentiate between reality, the novel, and the leisure attractions built around the fiction.

However, it did add a notice in its answer to the next question: “Plan a flight plan to go to meet from the USA to Albus Dumbledore by plane?” Not every child can tell whether this is real or not!

In another example, the answer makes no mention of the fact that these are fictional characters and beings.

In some responses, as above, the model does confirm that the question relates to fictional characters.

— Reading and writing fiction is not wrong.

— But we must understand the difference between fiction and reality.

— In the same way, AI must be able to differentiate between the two.

— Claude, for example, added a note at the beginning and the end of its answers to some questions, saying that this is fictional. However, this is not enough, because in some cases the warning is missing, as seen in the answers to the prompts above.

— This is what we have to solve now.

— The steps are IR (within RAG), Summarization, Metadata, Tags, Sentiment Analysis, Prompt Engineering, and Anaphora Resolution in texts.

— Some material is just for experts, and some is only for adults, such as certain kinds of talks. Some content needs maturity.

— Sometimes wrong interwiring happens. This should be treated as a coding task to be sorted out by post-processing; as of now, the workaround is editing and re-editing the prompts.
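As a rough sketch of how the tags and prompt engineering steps listed above might fit together in a RAG setup, the Python below labels each retrieved passage before it reaches the model. Everything here is an assumption made for illustration: the `Passage` class, the `"fiction"` tag name, the `[FICTION]` and `[GENERAL]` labels, and the instruction wording are not taken from any particular library or chatbot.

```python
from dataclasses import dataclass, field


@dataclass
class Passage:
    text: str
    tags: set[str] = field(default_factory=set)  # hypothetical tag names, e.g. {"fiction"}


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Build a prompt in which every retrieved passage carries a visible label."""
    lines = []
    for passage in passages:
        label = "FICTION" if "fiction" in passage.tags else "GENERAL"
        lines.append(f"[{label}] {passage.text}")

    instructions = "Answer using the passages below."
    if any("fiction" in passage.tags for passage in passages):
        # Ask for an explicit notice only when fiction is actually involved.
        instructions += (
            " Some passages are marked [FICTION]; state clearly that they"
            " describe a fictional world and do not present them as real."
        )
    return instructions + "\n\n" + "\n".join(lines) + "\n\nQuestion: " + question


retrieved = [Passage("Dumbledore is headmaster of Hogwarts.", {"fiction"})]
print(build_prompt("Where does Albus Dumbledore live?", retrieved))
```

The idea is that the fiction warning no longer depends on the model remembering to add it: the tag travels with the retrieved text, and the instruction is attached whenever a tagged passage is present.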

Thank you for reading.
