#ai #llm #deeplearning #ml

Here is the video lecture, subscribe for updates,

https://www.youtube.com/watch?v=qDwAm1f0JBY

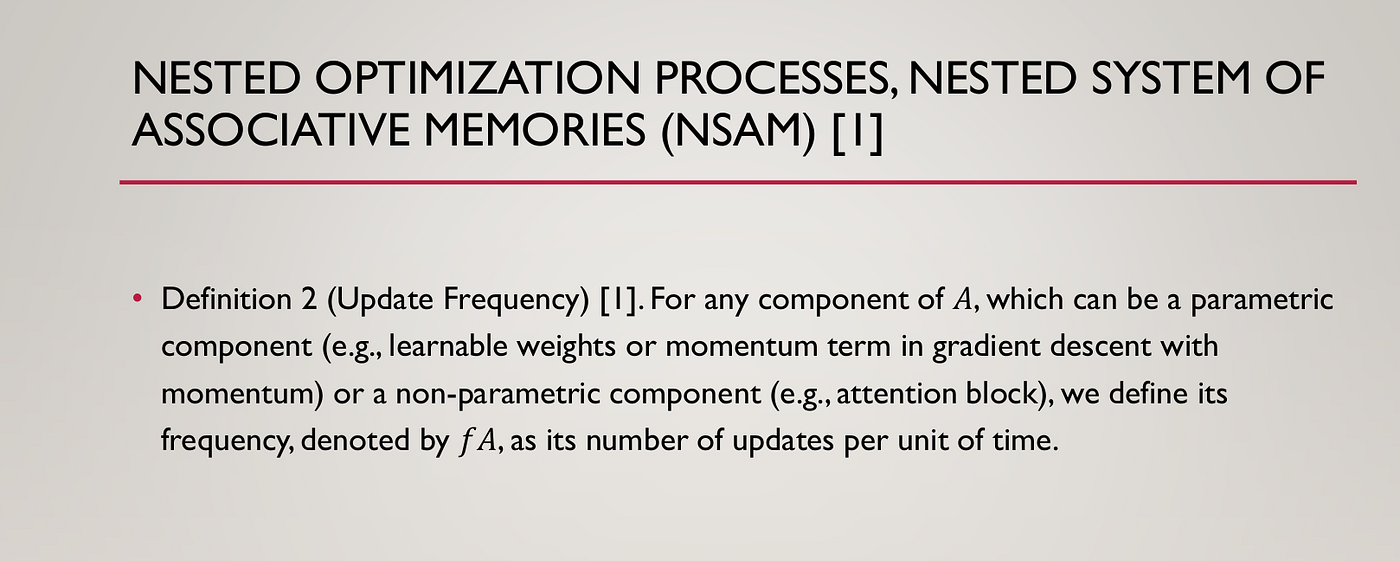

Some concerns, it catches the forgetting behavior of LLMs, the context once gone can be looked into nested memories and hence can be revised if nested parameters and memories are referenced. The pre training can’t be edited but the computation of temporary context can be referred if intact in these layers.

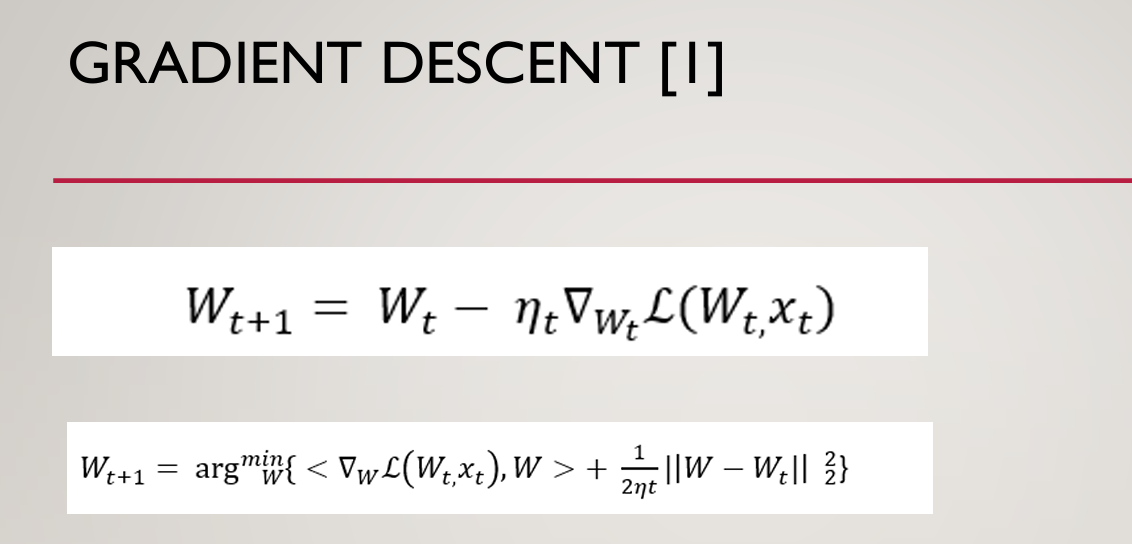

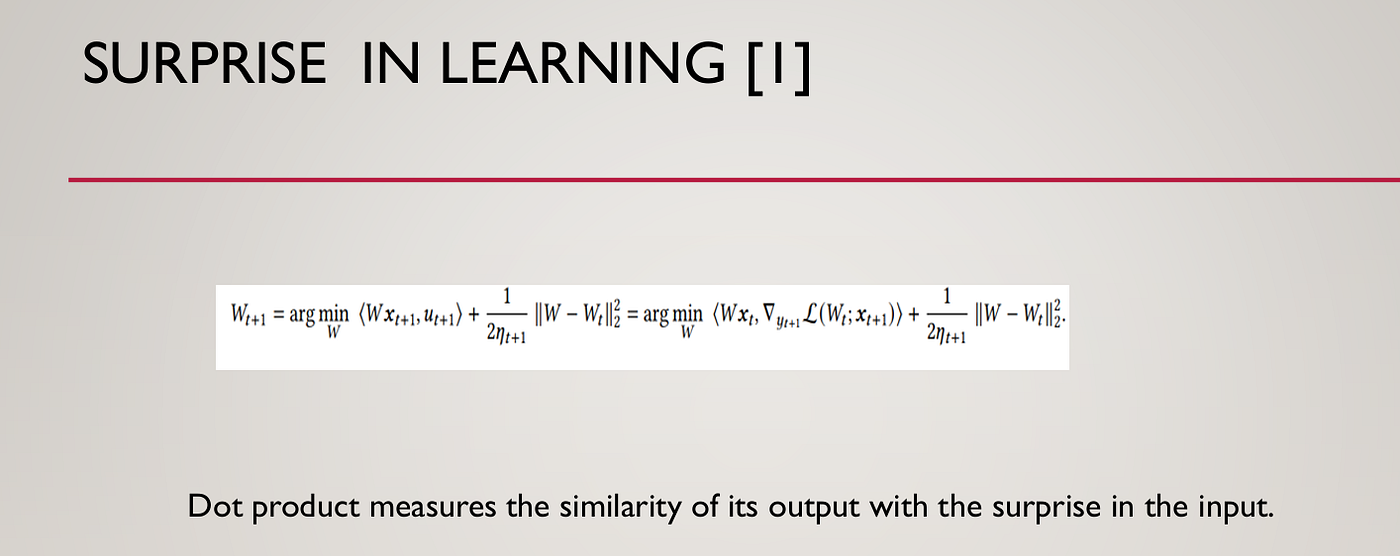

The terminologies and formulas we use are here, are taken from Reference [1].

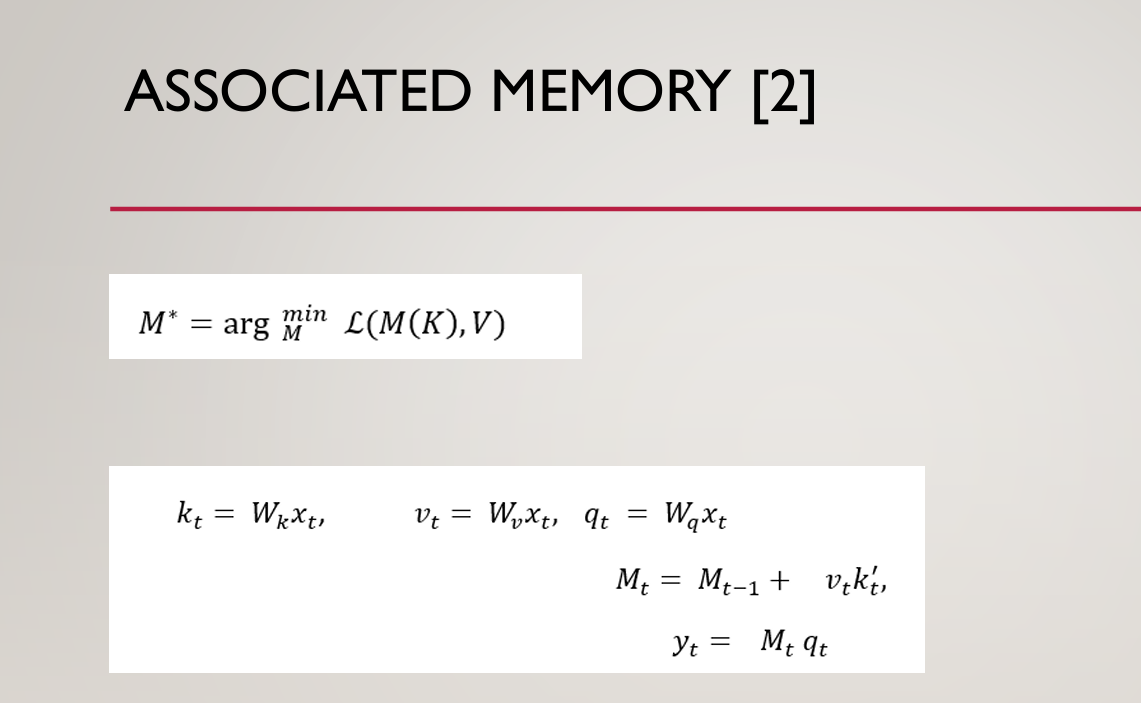

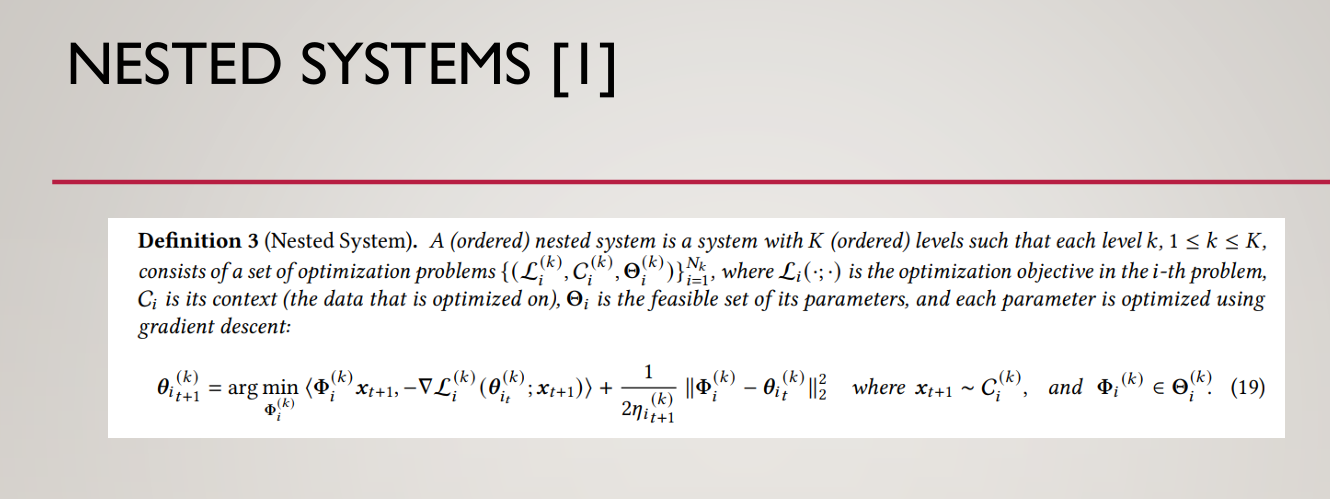

Associated Memories can be defined as follows,

Reference [1]

Reference [1]

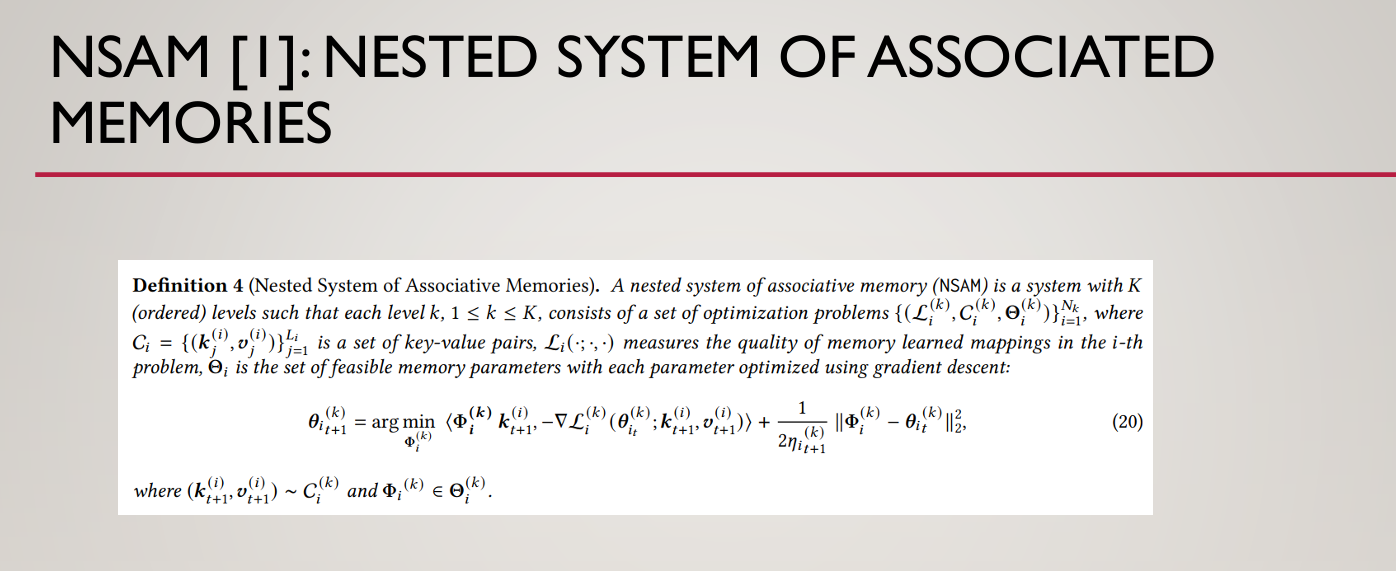

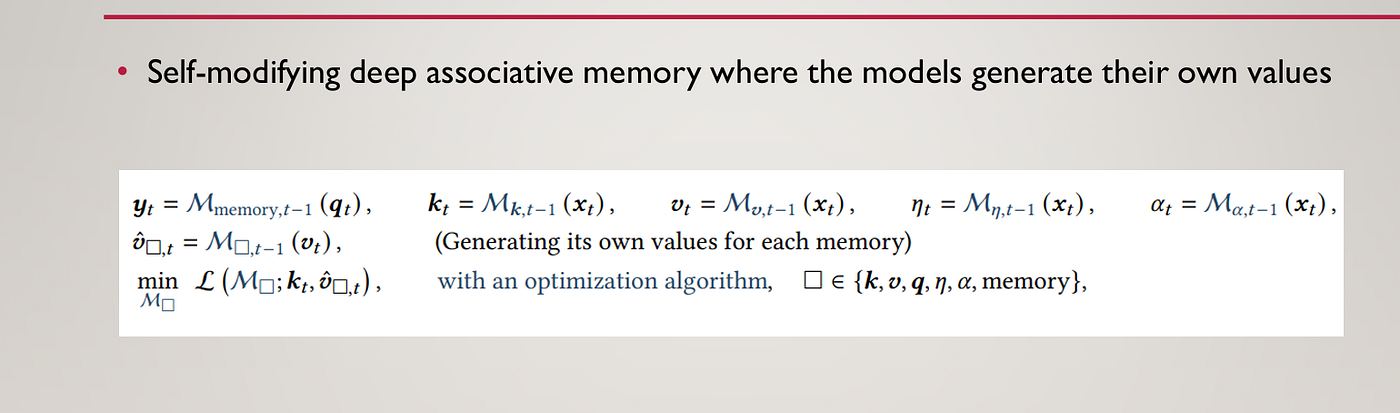

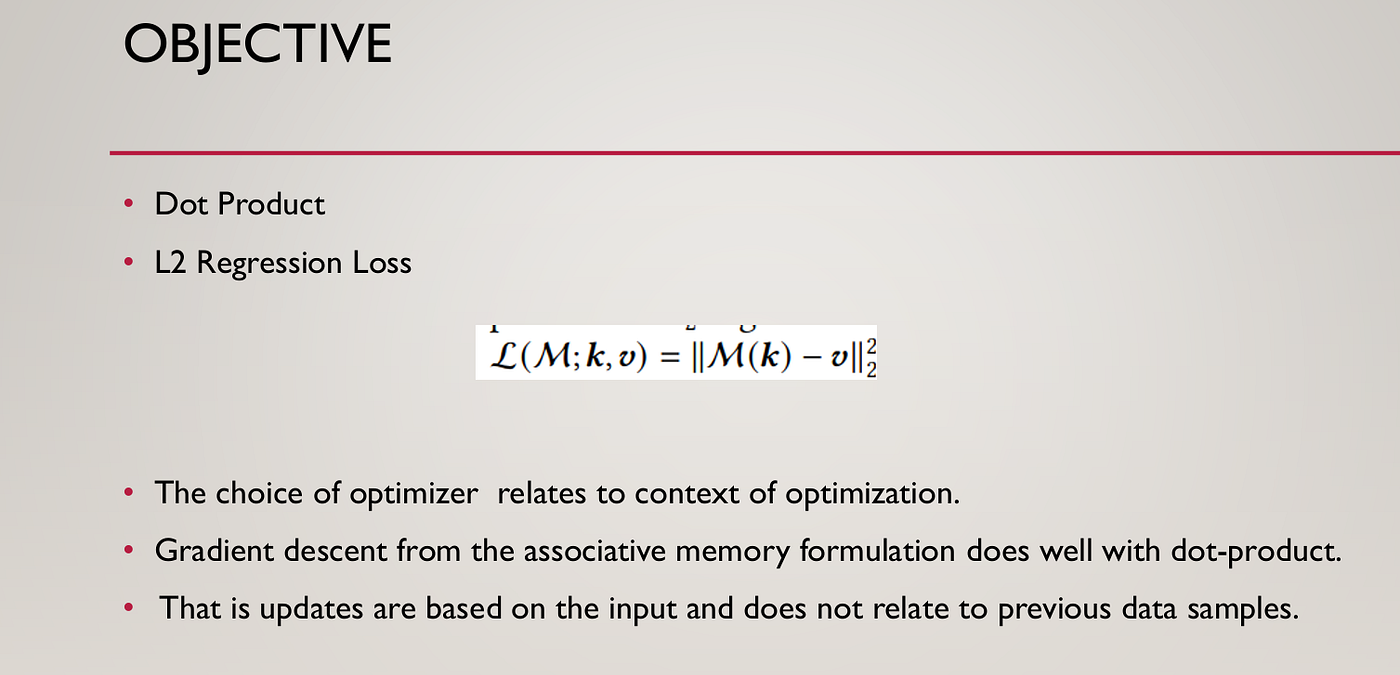

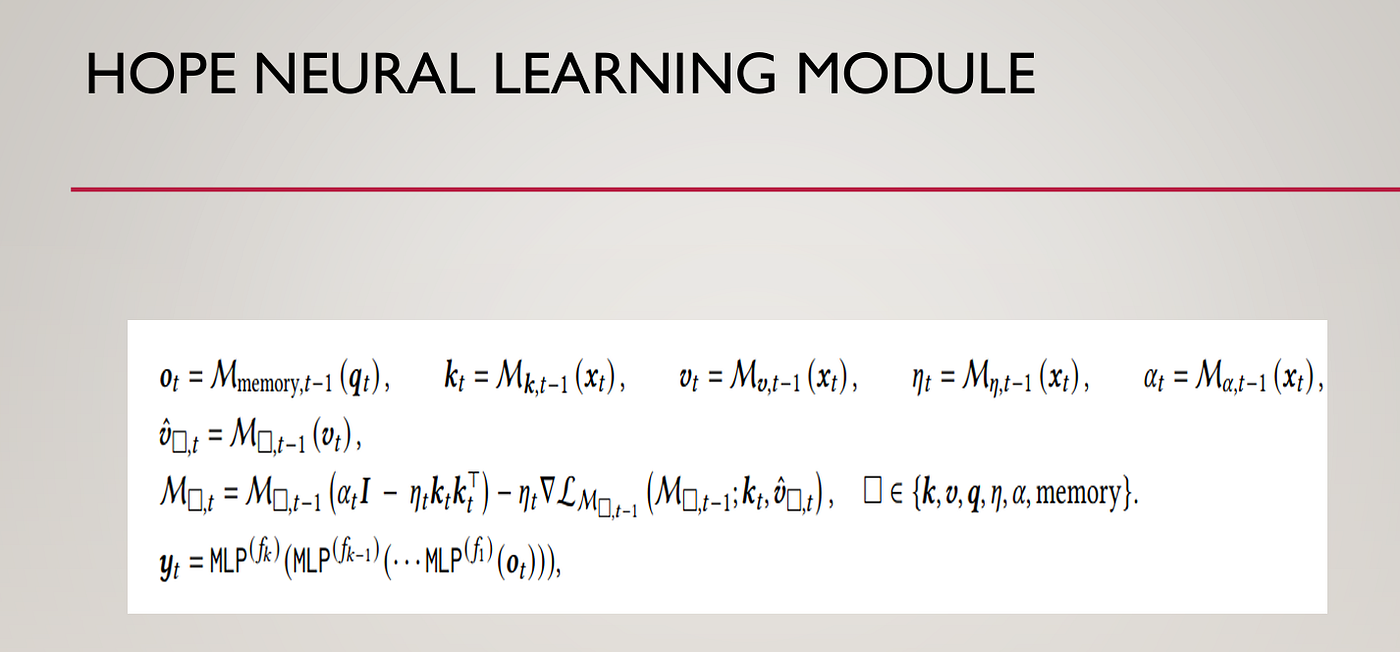

The same thing, but in key-value-based terminologies of associated memories:

Reference [1]

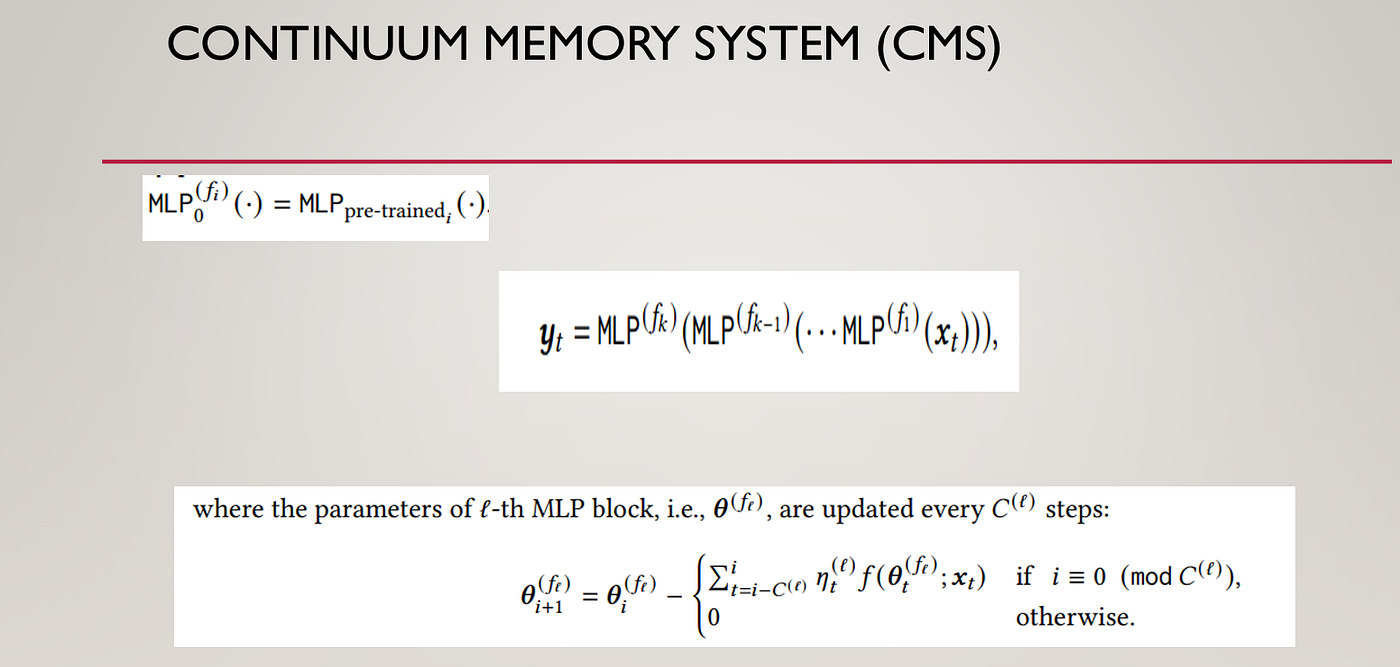

Reference [1]

Reference [1]

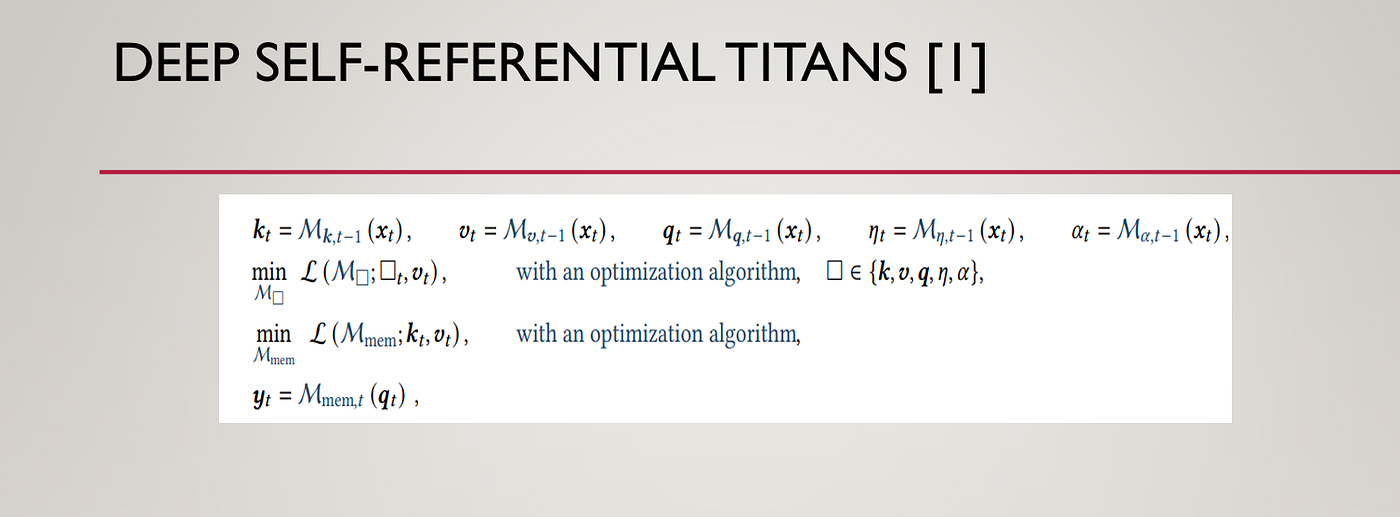

Reference [1]

Reference [1]

Reference [1]

The experimental results are good and have been explained in [1].

References

[1] Behrouz, A., Razaviyayn, M., Zhong, P., & Mirrokni, V. (2025). Nested learning: The illusion of deep learning architectures. In The Thirty-ninth Annual Conference on Neural Information Processing Systems.