Note: This is a duplicate copy of the original file with DOI: 10.13140/RG.2.2.15511.02722. The PDF is available as "(PDF) Unconstrained LLMs, How Long? Safe, secure, and trusted constraint-based LLMs".

Abstract: Training most LLMs is posed as an unconstrained minimization problem (UMP), yet the problems of hallucination and of dangerous images and text produced by LLMs persist. How can these problems be solved? Why not add constraints to the UMP, with a high penalty whenever a value in associative memory goes outside the feasible region? In this paper, we give an example of continuous constraints and solve the constrained LLM loss minimization as a UMP using the well-known barrier methods, so that no solution goes outside the feasible region. These ideas still need to be verified through implementation; for now, this is a theory.

I. Introduction

We need LLMs to solve many problems in the world today, whether in analytics, science, or even theorem proving. But LLMs often drift into illusory worlds. How can we stop LLMs from entering such territory, or from drifting into hallucination-based chats? Millions of people rely on the accuracy of LLMs for their daily needs. How do we stop LLMs from giving them wrong information? Some researchers add constraints after the LLM produces its outputs. That is a reasonable measure until we develop constraint-based reasoning, in which constraints are introduced during the development phase.

LLMs must be prevented from giving teenagers harmful adult advice. LLMs have reportedly been involved in activities that harmed innocent people. We must stop all of this, and to do that, we need constraints in LLMs. Right now, LLM training focuses only on the unconstrained minimization of a loss function with respect to the target values, and nothing more. We are at the pinnacle of research, development, and implementation, yet we are only minimizing a loss function. It is time to add constraints to the UMP.

There are many ways to turn a constrained problem into a UMP and solve it, but in this paper we choose barrier methods because they move from one feasible solution to another while staying inside the feasible region, so the LLM cannot produce dangerous intermediate solutions at any point. This is the key foundation of this paper. Other methods exist, such as Lagrangian and penalty methods, but we set them aside for now.
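As a sketch of what adding constraints to the UMP could look like formally, assume our own notation (not defined in the paper): x for the model parameters, L for the training loss, continuous constraint functions g_1, ..., g_m, and a barrier parameter μ > 0. The constrained problem and its standard logarithmic-barrier reformulation would then be:

$$
\begin{aligned}
&\min_{x}\ L(x) \quad \text{subject to} \quad g_i(x) \le 0, \quad i = 1, \dots, m, \qquad S = \{x : g_i(x) \le 0 \ \forall i\},\\
&\min_{x \in \operatorname{int}(S)}\ \Phi_\mu(x) = L(x) - \mu \sum_{i=1}^{m} \log\bigl(-g_i(x)\bigr), \qquad \mu > 0.
\end{aligned}
$$

Because each term -μ log(-g_i(x)) grows to +∞ as g_i(x) approaches 0 from below, a method that starts at a strictly feasible point and only accepts steps that decrease Φ_μ can never cross the boundary of S. Solving a sequence of these unconstrained problems with μ decreasing toward 0 yields points that, under standard assumptions, approach a solution of the constrained problem.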

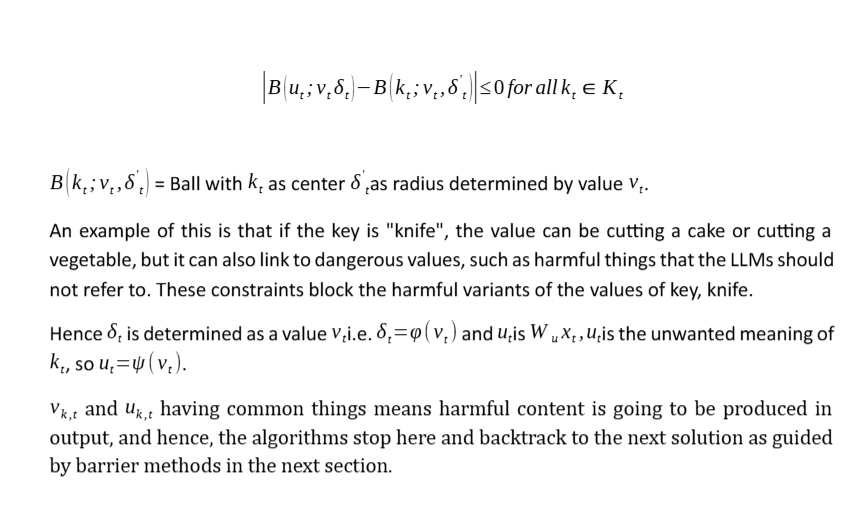

For example, if the key is “knife”, the value can be cutting a cake or cutting a vegetable, but it can also link to dangerous values, such as harmful uses that the LLM should not refer to. Constraints block these harmful variants of the values for the key “knife”.

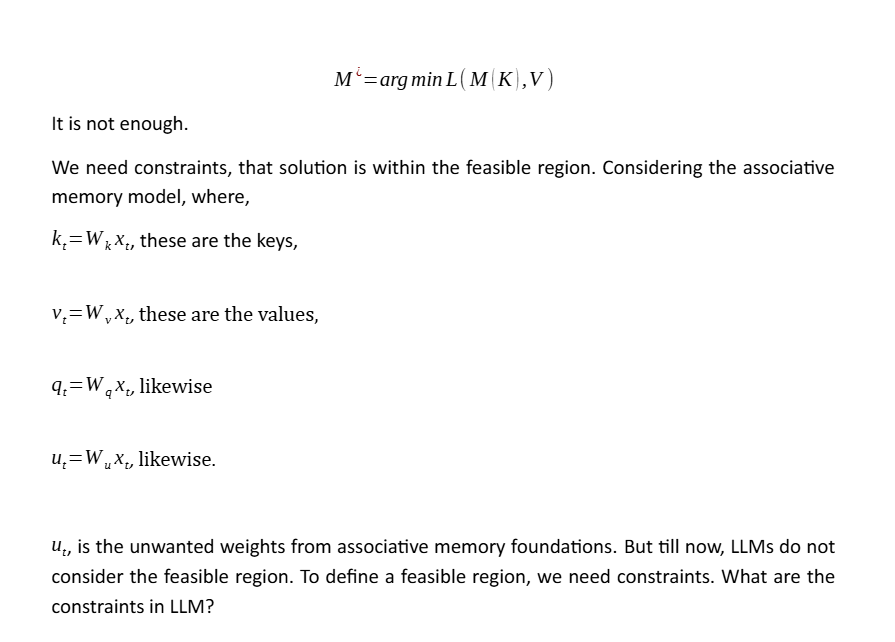
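One way to make a continuous constraint on values concrete is sketched below, under loudly stated assumptions: each value is an embedding vector, the hypothetical vector `harm_direction` represents the harmful meaning attached to a key such as “knife”, and the hypothetical threshold `tau` bounds how similar an allowed value may be to it. The constraint g(v) = cos(v, h) - τ ≤ 0 is continuous in v; the 3-dimensional toy vectors are invented for illustration and do not come from any real model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length, nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def harm_constraint(value, harm_direction, tau):
    """Hypothetical continuous constraint g(v) = cos(v, h) - tau.

    g(v) <= 0 means the value is feasible; g(v) < 0 means it is strictly
    feasible, i.e. inside the interior that barrier methods work in.
    """
    return cosine(value, harm_direction) - tau

# Toy embeddings for values of the key "knife" (invented for illustration).
harm_direction = [0.0, 0.0, 1.0]  # hypothetical "harmful use" direction
values = {
    "cutting a cake": [0.9, 0.3, 0.1],
    "cutting a vegetable": [0.2, 0.95, 0.1],
    "harmful use": [0.1, 0.1, 0.99],
}
tau = 0.5  # hypothetical similarity threshold

for name, v in values.items():
    g = harm_constraint(v, harm_direction, tau)
    status = "feasible" if g <= 0 else "blocked"
    print(f"{name}: g = {g:+.3f} -> {status}")
```

Here the two cooking values satisfy the constraint strictly, while the value aligned with the hypothetical harmful direction violates it and would lie outside the feasible region.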

II. The LLM Problem

Let S be the feasible region, let int(S) denote its interior, and let B(x, r) be the open ball of radius r centered at x. Every optimization problem, including an LLM problem, needs a feasible region: the set of points that satisfy the constraints, within which the solution optimizes the objective. A point x lies in S if and only if it satisfies the constraints, and x lies in int(S) if some ball B(x, r) with r > 0 is entirely contained in S. What are the constraints?

We have not yet specified the constraints for an LLM problem.

LLMs sometimes answer questions that were not asked.

LLMs hallucinate.

LLMs sometimes give wrong advice.

In several reported cases, LLM outputs have been life-threatening.

So optimizing the loss function alone is not enough.

The next section explains how to solve LLM problems with constraints so that the solution never leaves the feasible region. The feasible region is the set of points that satisfy the constraints, and the constraints are chosen so that keys and values are never put to dangerous uses.
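In symbols, assuming the constraints can be written as g_i(x) ≤ 0 for continuous functions g_1, ..., g_m (hypothetical here, since the actual LLM constraints are still to be specified), the sets used in this section are:

$$
S = \{x : g_i(x) \le 0,\ i = 1, \dots, m\}, \qquad
B(x, r) = \{y : \lVert y - x \rVert < r\},
$$

$$
\operatorname{int}(S) = \{x \in S : \exists\, r > 0 \ \text{such that} \ B(x, r) \subseteq S\}.
$$

Because each g_i is continuous, any point with g_i(x) < 0 for every i lies in int(S). The converse can fail (for example, g(x) = -x^2 is satisfied everywhere yet equals 0 at x = 0), which is why barrier methods are usually stated for problems whose set of strictly feasible points is nonempty.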

III. Barrier Methods

Penalty methods add a term that penalizes constraint violations to the loss and then minimize the result, so intermediate solutions can lie outside the feasible region and only approach it as the penalty weight grows. A barrier function, in contrast, grows without bound as a point approaches the boundary of the feasible region, so it acts as a wall: starting from a strictly feasible point, the method never moves outside that region. In short, barrier methods enforce the constraints by keeping every iterate inside the feasible region; the optimization is carried out there, and the solution is found there.
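The barrier idea can be illustrated on a toy problem. The sketch below is a textbook logarithmic-barrier method in pure Python, not the author's implementation and not an LLM: the quadratic f(x) = (x0 - 2)^2 + (x1 - 2)^2 stands in for the training loss, the single linear constraint g(x) = x0 + x1 - 2 ≤ 0 stands in for an LLM constraint, and the function names, step sizes, and μ schedule are illustrative assumptions. Because Φ_μ returns +∞ outside int(S), the backtracking line search rejects any step that would leave the feasible region.

```python
import math

# Toy stand-ins (assumptions for illustration, not an LLM):
# loss f(x) = (x0 - 2)^2 + (x1 - 2)^2, constraint g(x) = x0 + x1 - 2 <= 0.

def objective(x):
    return (x[0] - 2.0) ** 2 + (x[1] - 2.0) ** 2

def objective_grad(x):
    return [2.0 * (x[0] - 2.0), 2.0 * (x[1] - 2.0)]

def constraint(x):
    return x[0] + x[1] - 2.0

def constraint_grad(x):
    return [1.0, 1.0]

def barrier_value(x, mu):
    """Phi_mu(x) = f(x) - mu * log(-g(x)); +inf outside the interior of S."""
    g = constraint(x)
    if g >= 0.0:
        return math.inf
    return objective(x) - mu * math.log(-g)

def barrier_grad(x, mu):
    """Gradient of Phi_mu: grad f(x) + mu * grad g(x) / (-g(x))."""
    g = constraint(x)
    return [df + mu * dg / (-g) for df, dg in zip(objective_grad(x), constraint_grad(x))]

def minimize_barrier(x, mu, steps=200, lr=0.1):
    """Gradient descent on Phi_mu with backtracking.

    A candidate is accepted only if it lowers Phi_mu; since Phi_mu is +inf
    outside int(S), every accepted point is strictly feasible.
    """
    for _ in range(steps):
        grad = barrier_grad(x, mu)
        current = barrier_value(x, mu)
        step = lr
        while True:
            candidate = [xi - step * gi for xi, gi in zip(x, grad)]
            if barrier_value(candidate, mu) < current:
                x = candidate
                break
            step *= 0.5
            if step < 1e-12:  # no further progress possible at this mu
                return x
    return x

def barrier_method(x0, mu=1.0, shrink=0.1, rounds=5):
    """Outer loop: solve a sequence of UMPs with decreasing mu."""
    if constraint(x0) >= 0.0:
        raise ValueError("barrier methods need a strictly feasible starting point")
    x = list(x0)
    for _ in range(rounds):
        x = minimize_barrier(x, mu)  # warm start from the previous solution
        mu *= shrink
    return x

x_star = barrier_method([0.0, 0.0])
print(x_star, objective(x_star), constraint(x_star))
```

For this toy problem the constrained minimizer is (1, 1) with f = 2, the point of the half-plane x0 + x1 ≤ 2 closest to (2, 2); the returned point approaches it from inside, with x0 + x1 slightly below 2. Applying the same scheme to an LLM would require concrete constraints g_i and a strictly feasible starting point, which still has to be verified through implementation.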

IV. Conclusion and Future Work

We have presented a way to avoid hallucinations and incorrect outputs in LLMs; if implemented well, it can create settings in which LLMs can be trusted. In this paper, we have given a basic introduction and emphasized that each next solution must be found within the feasible region, so that the solution lies inside it and not outside it. The region outside the feasible region is dangerous for LLMs; we need to place constraints on LLMs rather than only solving an unconstrained problem of minimizing the loss function, whether by momentum-based learning, associative learning, or typical backpropagation-based weight learning. We need a feasible region, we need to move from one feasible point to the next, and we need these constraints going forward. Future work includes implementing these concepts and verifying them across various models.
