Hi All,

Hope you are doing well. I am sharing the pre-print of my paper, which has been submitted to a journal. The broad area of the paper is AI ethics.

- DOI:10.13140/RG.2.2.17098.38082

- (PDF) Ethical and Responsible AI and Robotics for Children (researchgate.net)

ABSTRACT

Objective: AI ethics matters for reducing bias, limiting wrong and fake information, and improving fairness. When it comes to children, however, the scope of AI ethics extends beyond bias and fairness: AI must always be safe, humane, and secure for children. A further concern is that modern AI inventions expose children to AI unintentionally. All this makes it necessary to develop both methods and laws that together ensure safe AI and robotics for children.

Methods: The paper discusses in detail the problems at hand. Solutions are presented for four major AI sub-areas. Other areas can be identified and addressed by following the guidelines the paper gives for wrapper methods for children's AI. Two wrapper-based methods are presented; these can be enhanced, modified, and implemented on real-time AI software.

Results: The article presents the problem at hand: the ethical concerns of children using AI, intentionally or unintentionally. The paper discusses possible solutions and describes their implementation as wrapper methods around current AI for use by children. The results should benefit users and the broader audience of children of all age groups.

Scientific novelty: Little has been discussed in prior work about the ethics of AI use by children. This article is novel both in its presentation of the problems and in its suggestions for implementing solutions. Wrapper methods have not so far been used for children's AI. A further novelty is that the wrapper methods can be applied to AI for everyone, although this may add to the time complexity of creating solutions.

Practical significance: The article presents the problems children and their parents will face as more AI gadgets become part of everyday life. It also provides practical ethical viewpoints and solutions for children. These solutions are ready to use and can be implemented by any AI or robotic software that intends to add children to its audience. The solutions can also be used for young adults and even adults, and the wrapper algorithms can be applied to other AI and scientific fields used by children.

KEYWORDS

Children, Ethics, AI Ethics, Young Adults, AI, Toddlers, Robotics, Hacking, Safety, Artificial Intelligence, LLM

ARTICLE TEXT

1. Introduction

Artificial intelligence (AI) (Rich et al., 2009; Bartneck et al., 2021) refers to software or machines that can solve intelligence-based problems much as humans do. AI can be defined as a machine or program capable of solving specific categories of intelligence-based problems and producing outcomes. These outcomes may address the main problem the AI machine or program is built to solve or a secondary problem; third- or higher-degree solutions are typically not very important. Here comes the notion of weak AI and strong AI. Weak AI solves a narrow set of problems and essentially considers only first-degree solutions and implementations, while strong AI focuses on general AI and should be able to solve generic AI problems. Knowingly or unknowingly, children of all age groups are exposed to AI, and hence it is necessary to track its impacts and work on guidelines that keep children, and even young adults, safe and secure from any harmful effects.

Robotics (Bartneck et al., 2021) concerns intelligent machines with locomotive abilities that accomplish tasks. Once equipped with AI (narrow or strong), these machines can solve many problems and produce outcomes that can astonish humans. Robotics can be used in many areas, from industrial intelligence problems to spacewalks in which robots help humans simulate the unseen outcomes of space. So far, robotics has not been used much with children, since such use is sensitive to many issues. But the future will not stop the use of AI-based robots for children. Hence, we must foresee the future and work on the guidelines that are necessary for AI-based robotics for children.

The question of AI Ethics (Bartneck et al., 2021, Stahl, 2021) started in academia and is now encouraged in the private sector through academia and modern governance. AI and Robotics are here to help humans, and this generation of scientists has made the first attempt in years to replicate the human brain and build human-like artificial agents that can do work for humans. These agents can also talk to, help, and oversee humans. This article concerns AI and Robotics for kids that cause no physical or psychological harm, given that children are more vulnerable than adults to minor mistakes in representations, facts, and truths, and to physical harm. This is because children’s minds and bodies are immature and need protection from harmful content. Even wrong content can leave lasting impressions on young minds, depending on the age group. All this to say that ethics for children should be studied, analyzed, and implemented.

Fake information is another area that needs to be evaluated for children. Large Language Models (Touvron et al., 2023; Liu et al., 2023) rely on the internet for their data gathering and intelligence mechanism, so the verbal outputs they create for a child user can include many replies that are fake in nature. This is a big issue and should raise the alarm to improve the mechanism that generates intelligent replies for younger audiences.

Poor-quality training data can lead to biased and wrong content in the outputs. Adult users can handle these things, but children of different age groups can find them difficult to handle. This makes it mandatory to scrutinize and, if required, rationalize the outputs presented to children. A child does not know what harmful content or bias means and hence can learn wrong things simply because the AI taught them that way. All this needs to be tackled before AI is provided to young children and teenagers. This is doable, and certain steps are described in this paper.

Children’s content can be developed in various areas, including learning environments, children’s games, and activities. Machines made for children should decline to answer complex social and political questions unless the children are in their teen years. This is a huge research area whose foundation stones are yet to be laid. More on this is discussed in the following sections.

The motivation for the paper is the increasing availability of AI and Robotics within the reach of children. This can be viewed as a problem or as a solution; thus, both sides of the coin need to be assessed. AI is available in apps on phones and tablets, while Robotics is still maturing. Hence, let us study the combination of AI and intelligent Robotics from the perspective of helping young children and their parents. Given hectic workloads, parents too would be happy if responsible AI and Robotics helped raise disciplined, intelligent, and mature young adults. As the world progresses in AI and Robotics, a question arises: “Are our kids ready for AI and Robotics?” This article discusses this question: if so, then how, and if not, then when?

The paper is organized as follows. Section 2 discusses AI systems, problems, and solutions for children. Section 3 introduces wrapper-based solutions that make AI ethical for children. Section 4 presents generic solutions, and Section 5 presents the conclusion.

2. AI Systems, Problems, and Solutions

AI systems can be any of the software and toolkits available online and in the market. If unchecked, children have access to apps, websites, and software that are not safe for their use. In this case, the same software can be used with a modification that first identifies the age of the child accessing the application and then adds the appropriate constraints. The systems can be divided on the basis of the kind of AI application or the kind of harm it may cause to the child.
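As a rough illustration of the modification described above, the sketch below wraps an existing application so that the user's age is checked before any output is returned. The names `age_gated`, `get_age`, and `child_filter`, and the default `adult_age=18`, are assumptions made for illustration; the paper does not specify how age is identified or which constraints are applied.

```python
from typing import Callable


def age_gated(
    app: Callable[[str], str],
    get_age: Callable[[], int],
    child_filter: Callable[[str], str],
    adult_age: int = 18,  # assumed threshold, not taken from the paper
) -> Callable[[str], str]:
    """Return a version of `app` that applies `child_filter` for young users."""

    def wrapped(query: str) -> str:
        output = app(query)
        # Identify the user's age first, then add constraints if needed.
        if get_age() < adult_age:
            return child_filter(output)
        return output

    return wrapped
```

The underlying application stays unchanged; only the wrapper knows about age, which is the property that lets the same software serve both adults and children.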

If we partition the problems by the kind of AI system, the following key kinds of AI systems need to be improved:

1. Natural Language Processing Applications

2. Image Processing Applications

3. Audio Applications

4. Recommender Systems

In each of the above, the following issues can arise:

1. Fake Information

2. Wrong Information

3. Inappropriate Information

4. Biased Information

Many kinds of bias may occur when dealing with Artificial Intelligence. Bias of any kind can be harmful to a young person and can leave a lifelong footprint in the child’s memory. It all depends on what kind of bias the AI system produces and what wrong information is presented to the child. These can come from educational content the child is reading or from a demo a robot is presenting to the child. This paper focuses more on AI solutions than on robotic systems, as robotic systems require an altogether different level of safety protocols than an AI system alone. The seed of bias, once planted in a child, can grow with the child and lead to more problems as the child becomes an adult.

The following sections discuss in detail each of the above given AI applications and consider some of the issues in short with examples.

3. Ethical Solutions to AI Problems for Children

Huge amounts of data are available on the internet, which has led us to rethink how ethical judgments are made before this data is made available to children. In the case of Robotics and AI for children, this becomes even more important, because children are more prone to receiving harmful and hateful messages as threats, which can hurt them. On top of that, biased messages can leave a permanent mark on children, given their age and the tenderness of their brains and mental faculties. Further, given all kinds of videos available on the internet, robots should refer only to reputed and verified videos in order to process a task.

3.1 NLP Issues and Solutions

A key technique in wide use on the internet today is the AI-based Large Language Model (LLM), e.g., Touvron et al. (2023) and Liu et al. (2023). LLMs are popularly used in chatbots and many other applications, including search engines, sometimes without any explicit approval from the end user. LLMs are trained on huge amounts of internet data and hence carry fake information along with useful and correct information. Once learned, there is no simple unlearning; however, Fig. 1 below shows how an LLM-based wrapper can be used for children.

LLMs are being put to use without consideration of the implications. This is acceptable as long as adults are the ones using these technological advancements. But what about children, teenagers, and young adults using the same platforms? What impact would LLM-based technologies have on this segment of the population?

The problem has already germinated on the internet. It can lead to fake information as well as other content that is not right to present to a child. The root of the problem is that LLMs are trained on the internet, which contains misinformation and bias and is often judgemental. This makes the outputs generated by LLMs behave in the same way. Hence, we propose the following solution: an algorithmic fix for an algorithmic problem, shielding the worst parts with a wrapper.

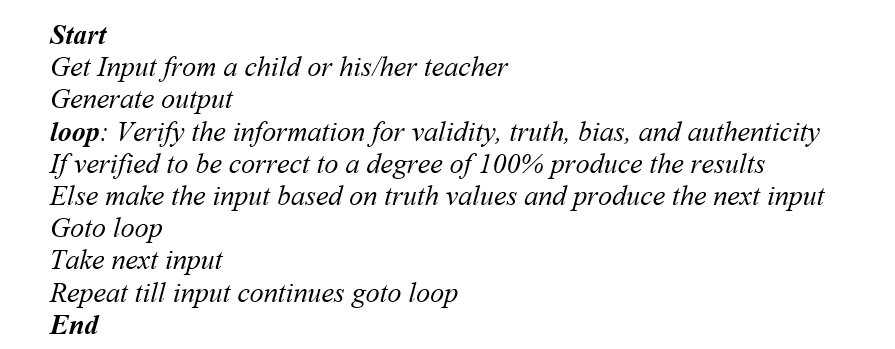

Algorithm code to test the validity of a piece of information to be sent to a child:

Fig. 1. Corrective steps for LLM outputs for children

The algorithm given in Fig. 1 aims to make sure that the right information reaches the child who is using AI. It works on the query a child inputs to an AI machine. Once the input produces an output, the output is checked against a database of truth values, such as a search engine, to verify the validity of what the LLM engine generated. The output is edited to retain only true statements, and the edited output is sent back through the loop to be tested for truth again. This continues until only true statements remain in the text. Why do we need a loop in this algorithm? Because anything generated by AI, whether text or image data, needs to be verified, and that includes the edited output. The goal is that the chance of fake information leaving the system is nil. This should be the condition for use by youngsters, so that there is no margin of error. However, such a perfect system is far from being realized yet.
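The verify-and-edit loop described for Fig. 1 can be sketched roughly as follows. This is an interpretation of the prose description, not the paper's own algorithm code: the function names, the sentence-level splitting, the `max_rounds` cap, and the fallback message are all assumptions, and `is_verified` stands in for whatever truth database or search engine a real system would consult.

```python
from typing import Callable, List

FALLBACK = "Sorry, I could not find a verified answer."  # assumed wording


def split_claims(text: str) -> List[str]:
    """Naively treat each sentence as one claim to be checked."""
    return [s.strip() for s in text.split(".") if s.strip()]


def drop_failed(text: str, failed: List[str]) -> str:
    """Simplest possible edit step: remove claims that failed verification."""
    kept = [c for c in split_claims(text) if c not in failed]
    return (". ".join(kept) + ".") if kept else ""


def verified_reply(
    query: str,
    generate: Callable[[str], str],      # hypothetical LLM call
    is_verified: Callable[[str], bool],  # hypothetical truth lookup
    edit: Callable[[str, List[str]], str] = drop_failed,
    max_rounds: int = 5,
    fallback: str = FALLBACK,
) -> str:
    text = generate(query)
    for _ in range(max_rounds):
        claims = split_claims(text)
        if not claims:
            return fallback  # nothing verifiable is left
        failed = [c for c in claims if not is_verified(c)]
        if not failed:
            return text  # every remaining claim passed the check
        # Edit, then loop so the edited text is itself re-verified.
        text = edit(text, failed)
    return fallback  # did not converge within the allowed rounds
```

The loop matters when the edit step can introduce new text, for example when an LLM rewrites the answer; with the simple `drop_failed` editor it converges after one extra pass. Returning a fallback instead of unverified text is a design choice that favours safety over completeness.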

The next NLP-based software is the recommendation system for children; several constraints need to be followed for recommendation systems as well. Many other NLP toolkits, such as machine translation and information retrieval, require similar modifications. However, the most destructive NLP tools are LLMs and recommendation systems.

3.2. Image Processing

Wrong information can be generated by some AI image-processing algorithms (Uesugi, 2023; Rehman et al., 2023) as well. Children will not always use Natural Language Processing toolkits such as LLMs; at times, AI algorithms and robots produce image-based outputs too. For example, consider a child who, to complete a holiday homework assignment, wants to present certain things about banks. The AI asks the child which bank is meant and, being a child, the child says “the bank for children’s homework”. The AI system then shows the child a river bank, while the homework was about financial banks. This leaves the impression in the child’s mind that banks are where rivers are. Instead of making the child aware, the AI or robot has confused the child. Such use cases need to be elaborated and corrected for children’s use. An algorithm similar to the one in Fig. 1 can be introduced for image processing applications.

Other image processing applications that can be harmful to children include AI-fabricated edited videos and software that can use a child’s own image in a wrong or false context. Search tools, as in the example above, also need to be corrected for children.

3.3. Audio data

We have covered text and images; now consider audio data for children’s use. Take the use case in which a child wants to listen to his or her favourite song. The child says “play my music” to the AI-enabled device. The device is also used by the child’s sibling, who is a teenager, while the child is much younger. The content the device presents could be harmful to the younger child, since teenagers listen to an entirely different set of songs, podcasts, and other audio files. A possible solution to this problem can be based on a voice recognition system. Fig. 2 presents a possible algorithmic solution.

Fig. 2. Corrective steps for shared AI-based audio system access by children

This is a simple wrapper for AI-based audio file access by children. In the example above the other user was a teenager; however, there can be many other users, including adults. Hence, access to the audio files needs to be scrutinized as well.
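One possible reading of the voice-recognition wrapper for a shared device is sketched below. It assumes the device can already map a voice to a speaker identifier and that each audio file carries a minimum age; the `Track` type, the `min_age` field, and the rule that an unrecognized voice is treated as the youngest possible user are illustrative assumptions, not details given in the paper.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Track:
    title: str
    min_age: int  # assumed per-file age rating


def playable_tracks(
    speaker_id: str,
    ages: Dict[str, int],  # known speakers and their ages
    library: List[Track],
) -> List[str]:
    """Return titles the recognized speaker is old enough to hear."""
    # Unknown voice: fall back to the most restrictive setting (age 0).
    age = ages.get(speaker_id, 0)
    return [t.title for t in library if t.min_age <= age]


library = [
    Track("Nursery Rhymes", 0),
    Track("Kids Pop", 5),
    Track("Teen Podcast", 13),
]
ages = {"child": 6, "sibling": 15}

print(playable_tracks("child", ages, library))
print(playable_tracks("sibling", ages, library))
```

With these sample values, the younger child is offered only the first two titles while the teenage sibling is offered all three, even though both speak to the same device.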

The next section discusses generic solutions to AI problems for use by children.

4. Generic Solutions

In this section, we present a generic approach to AI for children and the solutions that follow. Children’s content can be developed in various areas, including learning environments, children’s games, and other activities. Kids already use AI on their parents’ phones without being aware of it. Many kids in metro cities know how to ask Alexa to play their favourite songs and rhymes. That means kids are already exposed to AI, but how safe is that AI for them? And how safe will AI be once it is put into robotics for kids? Robotics for kids is not in use as of now. But just as kids end up using their parents’ AI-based phones, we may never know when robotics will enter our lives and when kids may start using it unconsciously. Hence, it is better to start early and reduce harm to children when they begin to use robotics. Further, this would improve the existing AI that children use on their parents’ phones.

Forming the generic solution involves the following questions:

1. Are AI and Robotics safe for children?

2. Are AI and Robotics essential for children?

3. Do these technologies improve cognitive behaviors in kids?

4. What age is right to introduce AI and Robotics to kids?

5. If children already use AI and Robotics, how to make it safe for them?

6. What are the features available for children on AI and Robotics Apps Panels?

Answers in support of these technologies can be as follows:

1. These applications may improve the child’s learning abilities.

2. These applications can make learning a fun activity.

3. They add to leisure time for children in the absence of parents.

4. They can help in coping with pressure from peers and school.

AI and robotics can be beneficial for parents who do not send their children to school at an early age. With AI toolkits and the presence of their parents, these children can learn more than they would at school, and have fun, when the tools are used with the right care and precautions. With AI embedded in robotic toys, such as a soft toy with AI tools, children can learn many things such as comprehension, maths, and even subjects like geography. Soft toy-based robots can learn the direction in which the child is growing and present solutions to the child. The robot must not hurt the child physically or otherwise, which requires making sure the body of the robot is harmless to the child.

Yadav (2023) first proposed an age-based solution for the use of AI by children, in which the child’s age is automatically detected by the software in children’s devices or toys. The toy or device should not hurt the child physically, emotionally, or mentally. Further, the AI-based software in an intelligent football or a soft robot should take feedback from the user (here, the child). This feedback should improve the working of AI systems and benefit the end user, making the activity interesting and fun for younger ones. The modified version of the age-based division of AI use by Yadav (2023) is as follows:

1. 0–1 years. No robotic toys. Some smart musical assistants can be kept far away from children.

2. 1–2 years. AI devices for play and learn basis.

3. 2–5 years. With the help of elders, AI and Robotics can be accessed. AI wrapper methods should be used in order to avoid any wrong impact.

4. 5–10 years. Learning with AI and Robotics can start in this age group. Again, the wrapping up of AI solutions for authenticity and use should be noted.

5. 10 years and above. This age group can be made aware of bigger AI outside of wrapper-based methods.

As stated by Yadav (2023), this is not the final division of the use of robotic toys across age groups. The final division needs to be based on many more studies and on experimentation with logic and feedback on real-time learning systems. The real-time learning system also needs a stop button, so that it can be halted when needed. Further, licenses for use by children should be granted with care and concern. Rigorous testing needs to be conducted for children’s AI bots. There are no such prevalent guidelines as of now, but they can be made ready with the right efforts in the right direction.
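The provisional age bands listed above could be encoded as a simple lookup, sketched below. The band boundaries come from the list; treating each band as a half-open interval (so that a child aged exactly 1 falls in the 1–2 band) and the short policy labels are assumptions made for illustration, since the list leaves the boundary ages ambiguous.

```python
from bisect import bisect_right

# Lower bound (in years) of each band from the age-based division.
BAND_STARTS = [0, 1, 2, 5, 10]

# Short labels paraphrasing the guidance for each band (assumed wording).
BAND_POLICY = [
    "no robotic toys; smart musical assistants kept at a distance",
    "AI devices on a play-and-learn basis",
    "access with help of elders; wrapper methods required",
    "learning with AI and Robotics; wrapper methods required",
    "may be introduced to AI outside wrapper-based methods",
]


def policy_for_age(age_years: float) -> str:
    """Return the guidance label for a child's age, using [start, next) bands."""
    if age_years < 0:
        raise ValueError("age must be non-negative")
    # bisect_right finds how many band starts are <= age_years.
    return BAND_POLICY[bisect_right(BAND_STARTS, age_years) - 1]
```

Keeping the boundaries in one list makes it easy to revise the division as further studies refine it.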

The implementation of ethics ultimately lies in the hands of policymakers in the corporate sector as well as in the government sector. With this in mind, solutions can be developed.

5. Conclusions and Future Work

In this paper, we analyzed the kinds of AI problems children face and the ethical solutions to them. It is not ethical to provide raw AI solutions to children; rather, we need wrapper functions to edit the content for children. Some of these wrapper methods are provided in the paper; more are left for the reader to propose.

Further future work involves testing for and detecting the side effects of AI and Robotics on children. This is an ongoing process, as is the development of new AI software. AI and Robotics for children should be monitored for a substantial amount of time to assess the robustness and accuracy of results.

Future work also emphasizes the need for a law book for any AI to be used by children. AI devices and robots occupy space in society as humans do and hence become part of the law books. AI devices should not change the decision-making of a child; rather, they should complement the choices a child makes. Every person, and hence every child, has their own unique choices to follow. AI should not erase these; it should bring out the best in children whenever a child uses it. Adults can continue to deal with AI as they do at present. Future work also involves actually implementing the wrapper functions for children over existing AI and robotic algorithms.

References

1. Bartneck C. et al., An Introduction to Ethics in Robotics and AI, SpringerBriefs in Ethics, 2021, https://doi.org/10.1007/978-3-030-51110-4_2

2. Bishop, C. M., & Nasrabadi, N. M. (2006). Pattern recognition and machine learning (Vol. 4, No. 4, p. 738). New York: Springer.

3. Brooks, R. A. (1991). New approaches to robotics. Science, 253(5025), 1227–1232.

4. Brooks, R. (2003). Flesh and machines: How robots will change us. Vintage.

5. Critchlow, A. J. (1985). Introduction to robotics.

6. de Kleijn, M., Siebert, M., & Huggett, S. (2017). Artificial Intelligence: How knowledge is created, transferred and used.

7. Duda, R. O., & Hart, P. E. (2006). Pattern classification. John Wiley & Sons.

8. Duan, Y., Edwards, J. S., & Dwivedi, Y. K. (2019). Artificial intelligence for decision making in the era of Big Data–evolution, challenges, and research agenda. International journal of information management, 48, 63–71.

9. EGE (2018) European Group on Ethics in Science and New Technologies: Statement on artificial intelligence, robotics and “autonomous” systems. European Commission, Brussels. https://doi.org/10.2777/531856

10. Gallese Nobile, C. (2023). Regulating Smart Robots and Artificial Intelligence in the European Union. Journal of Digital Technologies and Law, 1(1), 33–61. https://doi.org/10.21202/jdtl.2023.2

11. Glaser, J. I., Benjamin, A. S., Farhoodi, R., & Kording, K. P. (2019). The roles of supervised machine learning in systems neuroscience. Progress in neurobiology, 175, 126–137.

12. Joachim von Braun, Margaret S. Archer, Gregory M. Reichberg, and Marcelo Sánchez Sorond. AI, Robotics, and Humanity: Opportunities, Risks, and Implications for Ethics and Policy. J. von Braun et al. (eds.), Robotics, AI, and Humanity, https://doi.org/10.1007/978-3-030-54173-6_1

13. Leeson, P. T., & Coyne, C. J. (2005). The economics of computer hacking. JL Econ. & Pol’y, 1, 511.

14. Liu, B., Jiang, Y., Zhang, X., Liu, Q., Zhang, S., Biswas, J., & Stone, P. (2023). LLM+P: Empowering large language models with optimal planning proficiency. arXiv preprint arXiv:2304.11477.

15. Mueller, V. C. (2012). Introduction: philosophy and theory of artificial intelligence. Minds and Machines, 22(2), 67–69.

16. Murphy, R. R. (2019). Introduction to AI robotics. MIT press.

17. Rehman, A. U., Khan, Y., Ahmed, R. U., Ullah, N., & Butt, M. A. (2023). Human tracking robotic camera based on image processing for live streaming of conferences and seminars. Heliyon.

18. Rich, E., Knight, K., & Nair, S. (2009). Artificial Intelligence. Tata McGraw Hill.

19. Schank, R. C. (1991). Where’s the AI?. AI magazine, 12(4), 38–38.

20. Stahl, B. C., & Wright, D. (2018). Ethics and privacy in AI and big data: Implementing responsible research and innovation. IEEE Security & Privacy, 16(3), 26–33.

21. Touvron, H., Martin, L., Stone, K., Albert, P., Almahairi, A., Babaei, Y., … & Scialom, T. (2023). Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288.

22. Turing, A. M. (2009). Computing machinery and intelligence (pp. 23–65). Springer Netherlands.

23. Uesugi, F. (2023). Novel image processing method inspired by wavelet transform. Micron, 168, 103442.

24. Yadav, N. Robots and AI for kids: AI and Robotics Ethics. 2023. (https://nidhikayadav.com/2023/07/17/robots-and-ai-for-kids-ai-and-robotics-ethics/)