Note: The author does not know how many government or private-company employees’ chats were exposed; what matters now is securing ourselves against such exposure in the future. This incident could have caused more harm had it exposed organizations’ work data, and the remedies are discussed here.

Recently, hundreds of thousands of Grok users’ chats have leaked online. What does this mean, who could be affected, and what if more data is compromised? How should we respond, and how can we prevent future leaks?

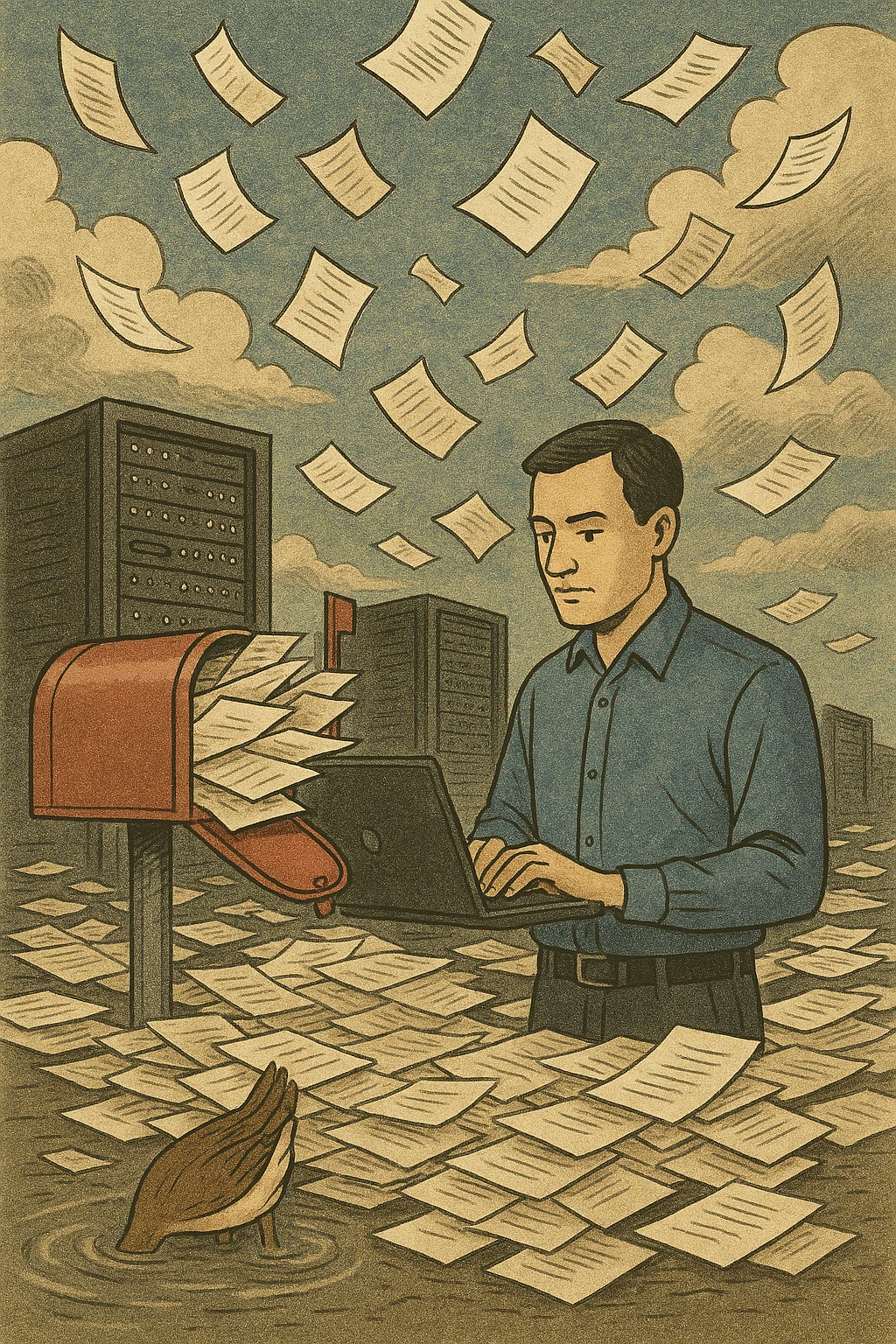

It’s like 18th-century letters reaching the post office, only to be read aloud by the postmasters so that anyone within earshot learns the private message.

How might this have harmed governments and private companies that rely on chatbots for consulting? How can we stay safe in the future, whether as individuals, companies, or organizations? Read on.

Summary

What is it?

— This is more than an AI ethics issue

— Privacy concerns for individuals, companies, and governments

— Security concerns for governments and mission-critical private-sector work

— It’s about work security, processes, and more

— Is it like data theft? If an employee’s work data leaks from a company, it can cause a lot of trouble.

— Many employees use chatbots for analysis, asking questions, helpdesk-style consulting, and analytics.

— Hundreds of thousands of Grok chats are now accessible through Google search. Similar issues have affected Meta and, to some extent, OpenAI before.

— How many of the leaked chats belonged to individuals, and how many were related to companies and government employees?

— Exposure of organizational work is also an issue, given how many organizations use these apps today.

— Mission-critical confidential work of governments and private companies may now be accessible to non-stakeholders.

What is at stake

— A person’s/organization’s privacy

— Exposing a company’s way of working exposes its basic protocols

— A person’s profession, and the area of work they discussed with an online chatbot, may be exposed

— Values may be leaked

— Persona may be leaked

— Private Affiliations may be leaked

— Beliefs of various kinds may be leaked

— Chat privacy is as important as profile privacy

— Exposed personal data can be correlated with other data about the same person

How to deal with the mess now? And how to deal with it in the future?

— Encrypt stored chat data, including existing chats and all future ones.

— Notify users (for example, by email) to download their data, then delete it permanently.

— Let users load their saved data back in when they need it.

— Make shared links more secure in the future. How much more secure they can be is another question.

— In the future, for some critical tasks, keeping data on local systems will be preferable to saving it on the internet. This means desktop applications or applications running in your own data centers.

— Ask users whether they want to permanently delete a chat thread that is critical.

— Saving chats on the internet helps build an AI profile of a person or organization, including their views on an issue and more, and the share option makes chats easy to handle later.

— So a secure sharing method with end-to-end encryption must be implemented.
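The download, delete, and reload remedies, together with the idea of keeping critical data on local systems, could look roughly like the following Python sketch. It is an illustration under stated assumptions, not any chatbot’s real API: chats are kept in a local SQLite database (Python’s built-in `sqlite3`), a thread is exported as JSON before deletion, deletion happens only if a hypothetical `confirm` callback returns `True`, and the exported JSON can be loaded back later. The table layout and all function names are hypothetical.

```python
import json
import sqlite3
from typing import Callable


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open a local chat store; pass a file path instead of ':memory:' to keep it on disk."""
    conn = sqlite3.connect(path)
    # Ask SQLite to overwrite deleted content with zeros instead of leaving it behind.
    conn.execute("PRAGMA secure_delete = ON")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages ("
        " thread_id TEXT NOT NULL, seq INTEGER NOT NULL,"
        " role TEXT NOT NULL, content TEXT NOT NULL,"
        " PRIMARY KEY (thread_id, seq))"
    )
    return conn


def add_message(conn: sqlite3.Connection, thread_id: str, role: str, content: str) -> None:
    """Append one message to a thread, numbering messages 0, 1, 2, ..."""
    with conn:  # commits on success, rolls back on error
        (next_seq,) = conn.execute(
            "SELECT COALESCE(MAX(seq), -1) + 1 FROM messages WHERE thread_id = ?",
            (thread_id,),
        ).fetchone()
        conn.execute(
            "INSERT INTO messages VALUES (?, ?, ?, ?)",
            (thread_id, next_seq, role, content),
        )


def export_thread(conn: sqlite3.Connection, thread_id: str) -> str:
    """Return the whole thread as a JSON string the user can download."""
    rows = conn.execute(
        "SELECT role, content FROM messages WHERE thread_id = ? ORDER BY seq",
        (thread_id,),
    ).fetchall()
    messages = [{"role": role, "content": content} for role, content in rows]
    return json.dumps({"thread_id": thread_id, "messages": messages})


def delete_thread(
    conn: sqlite3.Connection, thread_id: str, confirm: Callable[[str], bool]
) -> bool:
    """Permanently delete a thread, but only if the user confirms."""
    if not confirm(thread_id):
        return False
    with conn:
        conn.execute("DELETE FROM messages WHERE thread_id = ?", (thread_id,))
    return True


def import_thread(conn: sqlite3.Connection, exported: str) -> str:
    """Load a previously exported thread back into the store."""
    data = json.loads(exported)
    for message in data["messages"]:
        add_message(conn, data["thread_id"], message["role"], message["content"])
    return data["thread_id"]
```

Passing a file path such as `chats.db` to `open_store` keeps the data on the user’s own machine rather than on a remote service. `secure_delete` only covers the database itself; any downloaded JSON files or backups still need their own protection.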
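The point about making shared links more secure can be made more concrete. The following is a minimal Python sketch, not how Grok or any other service actually implements sharing. It assumes a stateless design in which each share link carries an unguessable random token, an expiry time, and an HMAC signature, and in which the shared page is served with an `X-Robots-Tag: noindex` header asking search engines not to index it. `SERVER_KEY`, `BASE_URL`, and the function names are hypothetical. This is not end-to-end encryption; it only limits who can find and reuse a link, and for how long.

```python
import hashlib
import hmac
import secrets
import time
from typing import Optional
from urllib.parse import parse_qs, urlsplit

# Hypothetical server-side signing key; a real service would load it from a secrets manager.
SERVER_KEY = secrets.token_bytes(32)

# Hypothetical base URL, used only for illustration.
BASE_URL = "https://chat.example.com/share"

# Headers a server could send with every shared-chat page: ask search engines
# not to index or follow it, and ask caches not to store it.
SHARE_PAGE_HEADERS = {
    "X-Robots-Tag": "noindex, nofollow",
    "Cache-Control": "no-store",
}


def _sign(token: str, expires_at: int) -> str:
    """HMAC-SHA256 over token and expiry, so neither can be changed unnoticed."""
    message = f"{token}.{expires_at}".encode()
    return hmac.new(SERVER_KEY, message, hashlib.sha256).hexdigest()


def make_share_link(ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Create an unguessable share link that stops working after ttl_seconds."""
    now = time.time() if now is None else now
    token = secrets.token_urlsafe(32)  # 32 random bytes, URL-safe base64
    expires_at = int(now) + ttl_seconds
    return f"{BASE_URL}/{token}?exp={expires_at}&sig={_sign(token, expires_at)}"


def verify_share_link(url: str, now: Optional[float] = None) -> bool:
    """Accept the link only if it is unexpired and its signature matches."""
    now = time.time() if now is None else now
    parts = urlsplit(url)
    token = parts.path.rsplit("/", 1)[-1]
    query = parse_qs(parts.query)
    try:
        expires_at = int(query["exp"][0])
        signature = query["sig"][0]
    except (KeyError, ValueError):
        return False  # malformed link
    if now > expires_at:
        return False  # link has expired
    # Constant-time comparison, so the check does not leak timing information.
    return hmac.compare_digest(signature, _sign(token, expires_at))
```

A `noindex` header only asks well-behaved crawlers to stay away; anyone who already holds the link can still open it, which is why the expiry check matters as well.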

Thank you for reading.

Subscribe to my newsletters.

Best Regards,

….

Photo Credit: AI, DALL-E